Note: This article first appeared on the Caucasus Data Blog, a joint effort of CRRC-Georgia and OC Media. It was written by Dustin Gilbreath, a Non-Resident Senior Fellow at CRRC-Georgia. The views presented in the article are the author’s alone and do not necessarily represent the views of CRRC-Georgia, Caucasian House, or any related entity.

The effects of climate change are being felt increasingly acutely in Georgia. A CRRC poll of Georgians’ perceptions of climate change found that 90% of respondents considered it an important issue, and 75% reported changes in local weather patterns.

Climate change is increasingly having catastrophic impacts around the world, from an increase in insect-borne infectious disease to a rise in deadly heatwaves, flooding, and storms.

These impacts are also being felt in Georgia, with unpredictable weather severely impacting agriculture and winemaking, glacial melting causing an increase in natural disasters, and deadly weather events like the Black Sea storm Bettina increasing in frequency.

However, climate change had only infrequently appeared in mainstream Georgian discourse prior to the tragic Shovi landslides.

In this context, what does the Georgian public think about climate change?

Data from a newly released CRRC Georgia poll suggests that an overwhelming majority of Georgians consider climate change important, and most believe that they have personally experienced the climate changing in their area.

While few considered climate change one of the primary issues that Georgia faced, almost all believed that climate change was happening.

To assess the relative importance of climate change, the surveyed public was asked to identify the top issues the country faced, and allowed to name two. As in most surveys, economic concerns prevailed, with 21% naming the economy and 18% naming poverty as Georgia’s top issues. In contrast, 2% named climate change and 5% named environmental protection. In total, 6% named one or the other, as some respondents named both climate change and environmental protection.
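The overlap between the two answers can be worked out from the figures above using inclusion-exclusion. The short sketch below is illustrative only: the function name `implied_overlap` is hypothetical, and because the published shares are rounded to whole percentages, the result is an approximation rather than a figure reported by the poll.

```python
def implied_overlap(share_a, share_b, share_either):
    """Inclusion-exclusion: share naming both = A + B - (A or B)."""
    return share_a + share_b - share_either


# Rounded shares quoted in the article (percent of respondents).
climate_change = 2
environmental_protection = 5
either = 6

# Approximate only: each input is rounded to the nearest whole percent.
overlap = implied_overlap(climate_change, environmental_protection, either)
print(f"Implied share naming both issues: about {overlap}%")
```

In other words, the two shares sum to 7%, so a combined figure of 6% implies that roughly 1% of respondents named both issues.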

While the data suggests that climate change is not seen as a high-priority issue, it also shows that the public nonetheless considers it significant.

When asked how important or unimportant the issue was, 90% considered it to be important (31%) or very important (59%). The data paints a similar picture for how concerned the public is about climate change — 80% of the public is worried (42%) or very worried (38%) about climate change.

While there is a high degree of concern about the issue, the public is divided on the root causes of climate change. Only a third of Georgians (32%) believe that climate change is primarily driven by human action, while 42% believe it is partly natural and partly human-driven. One in five (21%) believe that climate change is primarily driven by natural causes.

While the primary cause of climate change is less than clear to the public, there is consensus on climate change being real: only 1% of respondents reported the belief that climate change is not happening at all.

The strong belief in climate change may be connected to how many people report seeing its effects in their communities and experiencing weather events that they take as proof of climate change.

Three quarters (75%) of the surveyed public reported that there have been changes in their local weather patterns, and 74% agreed with the statement that ‘I have personally experienced unusual weather that I feel is clear proof of climate change’.

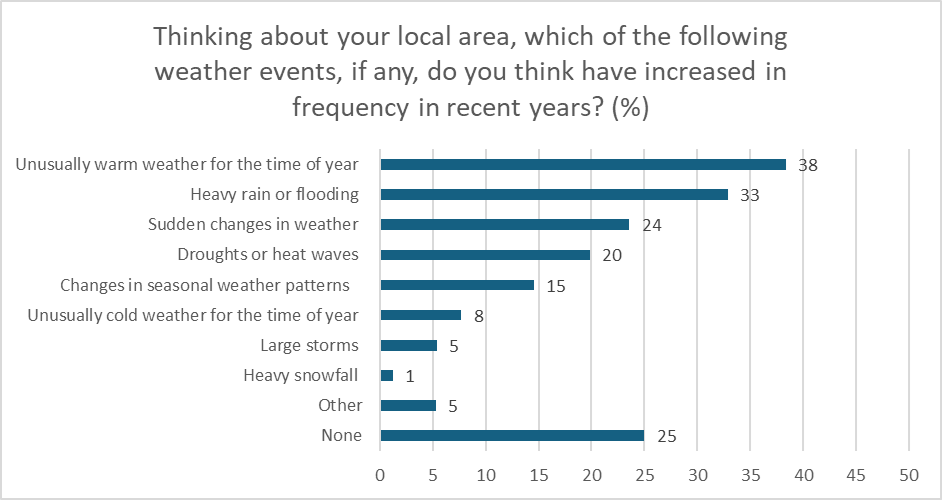

Among the specific weather events that people had noticed, unusually warm weather for the time of year, heavy rains and flooding, and sudden changes in weather topped the list. In contrast, fewer than 10% of respondents named heavy snowfall, large storms, or unusually cold weather.

The above data shows that while climate change is not a primary concern for the Georgian public, it does matter to them, and people are aware of the changing climate in their communities.

This article is based on a new report, available here.

Dustin works as a polling consultant for climate change-related organisations, as disclosed on his LinkedIn profile. The views within this article reflect the views of the author alone, and do not necessarily reflect the views of CRRC Georgia, any related entity, or any entity which Dustin works for.